GA4 Data Extraction to BigQuery

Challenge: How to backfill BigQuery with historical GA4 data?

Solution: Utilize the google.analytics.data_v1beta API to retrieve GA4 data and subsequently store it in BigQuery tables.

Part 1 of the ‘Mastering GA4 with BigQuery’ Series

Introduction

The transition from Universal Google Analytics to Google Analytics 4 (GA4) has brought along a new wave of powerful features. One of the most interesting capabilities of GA4 is its seamless integration with Google BigQuery. This provides businesses with the opportunity to harness the power of raw data, enabling custom queries and facilitating the transfer of data to Business Intelligence tools for advanced analytics and visualizations.

However, a significant hurdle many businesses face is the absence of a feature to backfill historical GA4 data into BigQuery. With the release of our Python tool on GitHub, we aim to bridge this gap, allowing companies to extract, transform, and load their GA4 data into BigQuery with ease.

Our Solution: Python Script for GA4 to BigQuery Data Migration

The provided Python script leverages the google.analytics.data_v1beta API to fetch vital metrics like events, conversions, and active users from GA4. Once extracted, the data is then seamlessly stored in a designated BigQuery database.

For those looking to seamlessly extract GA4 data and integrate it with BigQuery, we have created a dedicated GitHub repository containing a Python script to facilitate this process. The repository is a result of addressing the common challenge of backfilling BigQuery with historical GA4 data.

The repository also includes an extensive README that dives deep into the technicalities of the integration process.

Interested in exploring further? Dive into the repository and get started: https://github.com/createit-dev/310-GA4-BigQuery-Integration

Key Features:

- Configurable extraction: By utilizing the config.json file, users can easily specify parameters, such as the property ID, dataset ID, table prefixes, and date ranges, to customize their data extraction process.

- Efficient data checks: The script ensures that duplicates are avoided by checking BigQuery for existing records before inserting new data.

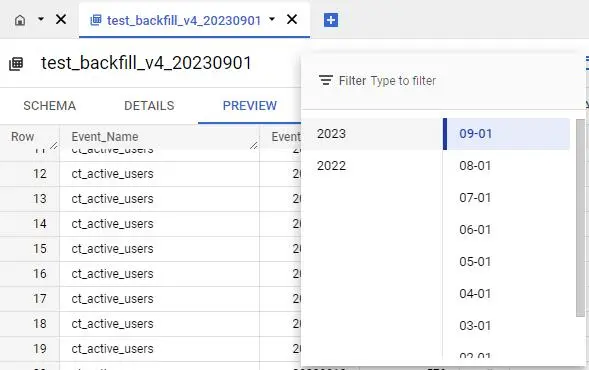

- Dynamic table creation: Depending on the data’s timestamp, the script creates monthly BigQuery tables, ensuring data organization and efficient querying.

- Command-line arguments: Users can perform an initial wide date range fetch or target data from just the previous day, offering flexibility in data extraction.

- Data output: Apart from saving data to BigQuery, the script also outputs the fetched data into a CSV file, providing an additional layer of accessibility.

Technical Details

- Dependencies: The script is dependent on several libraries, notably the Google Analytics Data API client and the Google Cloud BigQuery client.

- Authentication: OAuth2.0 authentication is implemented using service account credentials and client secrets, ensuring secure data access and transfer.

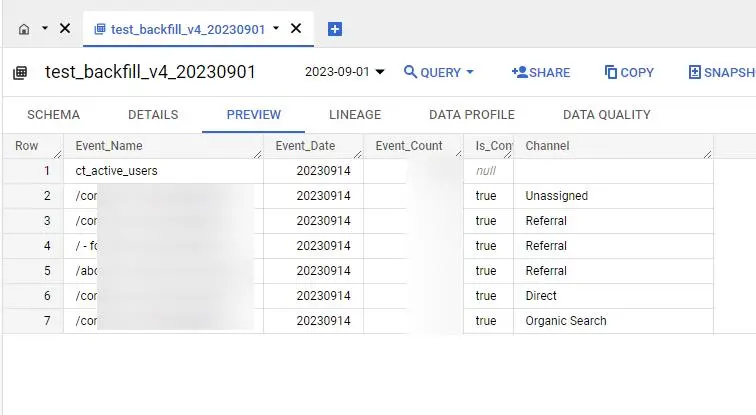

- Data Structure: The script structures data into key metrics, namely Event Name, Event Date, Event Count, Conversion Flag, and Channel, making it easier for businesses to analyze their GA4 data.

Significance of the Tool

- Historical data: Businesses can now tap into their rich historical data from GA4, ensuring that insights from past trends and patterns aren’t lost in the transition to BigQuery.

- Data consolidation: By centralizing GA4 data within BigQuery, businesses can achieve more comprehensive analytics by combining it with other datasets.

- Custom analytics: The tool paves the way for creating custom reports, dashboards, and analytics, moving beyond the standard offerings of GA4.

- Automation: With this script as the foundation, businesses can build automated data pipelines, ensuring that their BigQuery datasets remain up-to-date with the latest GA4 insights.

Using the Python Script

1. Setup & prerequisites:

- Ensure you have Python installed on your system.

- Install the necessary libraries using pip: google.analytics.data_v1beta, google.oauth2, google.cloud, and others.

- Set up a service account in the Google Cloud Console and download the JSON key. This key will be used for authentication.

2. Configuration:

- Edit the config.json file to include:

- CLIENT_SECRET_FILE: Path to your client secrets file for OAuth2.0 authentication.

- TABLE_PREFIX, PROPERTY_ID, DATASET_ID, INITIAL_FETCH_FROM_DATE, and SERVICE_ACCOUNT_FILE: Relevant information about your GA4 setup and BigQuery dataset.

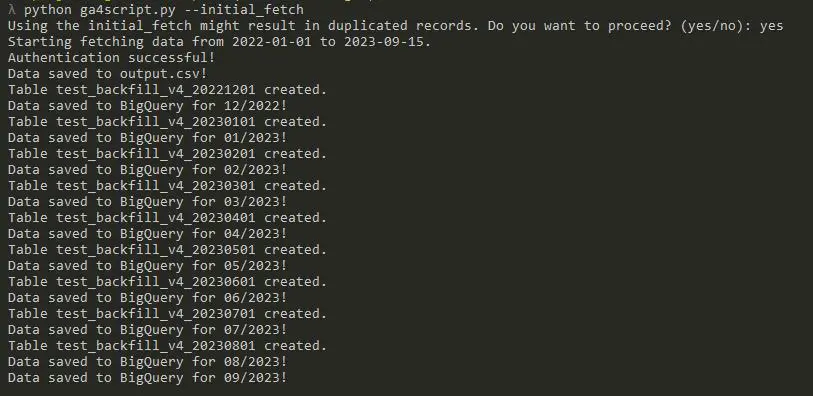

3. Running the Script:

- To execute the script, navigate to the directory containing the script and use the command: python ga4script.py [arguments]

- Available arguments:

- –yesterday: Fetch data from the previous day only.

- –initial_fetch: Extract data over a wider date range. Note: Using this might result in duplicate records.

4. Monitoring & output:

- The script will output its progress in the terminal, indicating the data fetch’s status and any issues encountered.

- Upon successful execution, the script will save the extracted data to a file named output.csv. This file can be used for manual verification or other processing.

- Additionally, the data will be stored in the designated BigQuery tables, organized by month.

By following these steps, users can efficiently and effectively utilize the script to backfill their GA4 data into BigQuery. Remember to always back up your data and work in a test environment first to avoid any disruptions or data loss.

Built-in ‘BigQuery Linking’ feature in GA4: A closer look

1. Data synchronization limitations:

The native BigQuery integration in GA4, referred to as ‘BigQuery Linking’, begins to synchronize data only from the moment it’s enabled. This means that any historical data prior to the activation date won’t be available in BigQuery. For businesses with extensive historical data in GA4, this poses a significant limitation.

2. The trade-off between granularity and control:

One of the key challenges with the built-in linking feature is the vast amount of data it pushes to BigQuery. While having access to raw data can be advantageous, the lack of control over the granularity of the data synchronized can be overwhelming. Without control over the specific metrics and dimensions transferred, storage costs can increase, and data processing can become more complex.

3. Data comparison challenges:

Given the structure and volume of the data pushed by GA4’s native BigQuery integration, comparing metrics or analyzing trends over time can become challenging. The data format might not always be conducive to straightforward queries, especially if businesses want to compare the new GA4 metrics with their old Universal Analytics data.

4. Traffic source determination issues:

A significant concern reported by users of the built-in BigQuery Linking feature is the difficulty in determining traffic sources. When this data is pushed into BigQuery without clear demarcations or explanations, businesses can face challenges in understanding the origin of their traffic, a critical metric for marketing and sales strategies.

Conclusion

In conclusion, while the ‘BigQuery Linking’ feature in GA4 offers a convenient way to integrate with BigQuery, businesses seeking detailed control, efficient storage, and clearer data interpretations might find it lacking. Custom solutions, like the Python script discussed earlier, can provide the flexibility and granularity required for more refined and insightful data analytics.

Note: As with any data migration tool, it’s essential to thoroughly test the script in a development environment before deploying it in a production setting.

Google Analytics 360: A premium offering for enterprises

For businesses that require advanced analytics capabilities, Google offers Google Analytics 360. Tailored specifically for enterprise clients, GA 360 provides an enhanced suite of features that goes beyond the standard offerings of Google Analytics 4. Pricing for GA 360 is customized to cater to the unique needs of each business, with starting rates hovering around $150,000 annually.

One of the standout features of GA 360 is its data backfilling capability. When a reporting view in Analytics is linked to BigQuery for the first time, GA 360 exports either 13 months of data or 10 billion hits, depending on which limit is reached first. It’s crucial to note that this historical data export is a one-time process for each view. If a view is unlinked and then relinked to a different BigQuery project, GA 360 won’t re-export the historical data for that view.

No-code solutions for bridging Google Analytics 4 and BigQuery

In the evolving landscape of data analytics, there’s a growing demand for solutions that simplify the process of data synchronization without delving deep into technical intricacies. Recognizing this, several platforms have emerged, offering ‘no-code’ solutions to seamlessly connect Google Analytics 4 (GA4) with BigQuery.

Among the notable names in this arena are Supermetrics, windsor.ai, Fivetran, and Snowflake. Each of these platforms brings its unique strengths, ensuring that businesses, irrespective of size or technical prowess, can benefit from streamlined data integration.

Upcoming in the series: “Mastering GA4 with BigQuery”

As the first installment in our series, this article laid the groundwork for extracting GA4 data and loading it into BigQuery using Python. In subsequent parts, we’ll delve deeper into configuring BigQuery for backfilling, integrating visualization tools like Tableau, and automating data synchronization:

Part 1: “Laying the groundwork: Python scripting for GA4 data extraction to BigQuery”

Dive into the initial steps of integrating Google Analytics 4 with BigQuery using Python. Understand the script structure, required configurations, and the flow of data from GA4 to BigQuery.

Part 2: “Configuring the storage: Setting up BigQuery for GA4 backfilling”

Delve into the specifics of preparing BigQuery for seamless data ingestion. We’ll cover dataset configurations, table structures, and best practices to ensure your GA4 data is stored efficiently.

Part 3: “Visualizing insights: Integrating Tableau with BigQuery for GA4 data analysis”

Data is only as good as the insights it provides. In this installment, we’ll walk you through the steps of linking BigQuery with Tableau, turning your raw GA4 data into insightful, actionable visualizations.

Part 4: “Keeping data fresh: Automating GA4 to BigQuery data synchronization”

Your analytics endeavors shouldn’t be hampered by outdated data. We’ll introduce strategies and tools to ensure that your GA4 data in BigQuery is always up-to-date, automating the synchronization process for maximum efficiency.

Analytics is one of the main elements affecting business continuity especially when a company is expanding its product portfolio and when the number of customers increases. See what else is worth paying attention to.